Azure AI Content Understanding: Comprehensive Guide and Reference

Overview :

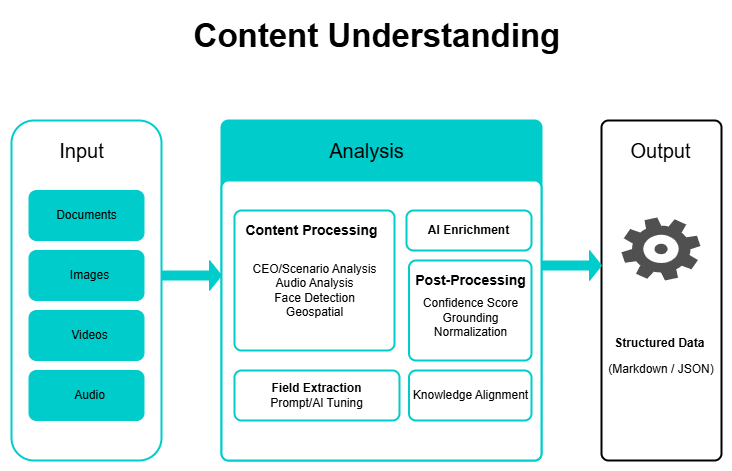

Azure AI Content Understanding is a cloud-based service that helps organizations manage large volumes of unorganized data without manually going through each file. It pulls out important information from various types of content and arranges it into a clear, structured format, making it easier to work with and analyze.

A key advantage of this service is that it does not require expertise in AI or prompt engineering; it automates workflows to make the entire process fast and efficient. It integrates with the Azure ecosystem and connects with other Microsoft tools such as Azure AI Foundry, Azure OpenAI, and Microsoft Power Platform.

Purpose :

The purpose of this service is to:

- Convert raw or unorganized data into a structured format that supports automation and data analytics.

- Handle different types of input, such as audio, text, images, and video, for diverse business applications.

- Keep things simple by removing the need for custom model training or prompt engineering.

- Enable intelligent document processing (IDP), media asset management, and conversational analytics.

Key Features :

- Multimodal Data Ingestion:

- Processes different types of data such as text, documents, images, audio, and video.

- Extracts the important information (for example, through OCR) and converts it into a machine-readable format.

- Field Extraction and Schema Inference:

- Users can define rules or templates for extracting specific information, such as invoice data, contract clauses, or sentiment.

- It can also generate new insights (e.g., summaries) without requiring complex prompts or prompt engineering.

- Standard and Pro Modes:

- Standard Mode: Reliable for simple use cases, e.g., extracting information from a document.

- Pro Mode: Designed for complex scenarios. It handles multiple documents at once, supports multi-step reasoning, and connects to external knowledge bases for tasks such as cross-referencing information. Currently, these features are limited to document inputs, with plans to extend them to other input types in the future.

- Data Enrichment:

- Includes features like layout element recognition, speaker diarization in audio, and face detection, enhancing the quality of extracted data.

- Content Filtering:

- Includes a built-in system that filters harmful content, e.g., hate speech or violent content, so that it is not displayed. The filters can optionally label such content rather than block it completely.

- Face Capabilities:

- Supports face detection and recognition, including building a person directory for identifying individuals in images or videos. Access to these features is restricted to Microsoft-managed customers and requires registration.

- APIs and Integration:

- Offers REST APIs for easy integration into existing applications and workflows.

- Compatible with Azure AI Foundry for no-code experiences and tools like Power Automate for automated workflows.

Release History :

- Initial Public Preview: November 19, 2024, with API version 2024-12-01-preview. This first publicly available version introduced Standard Mode and basic multimodal support.

- Major Update: May 1, 2025, with API version 2025-05-01-preview. It added Pro Mode, which supports multi-file processing and external knowledge bases for tasks such as cross-referencing.

- Additional Updates:

- May 19, 2025: Improved document processing by adding support for new file formats (docx, xlsx, pptx, msg, eml, rtf, html, md, xml).

- June 19, 2025: Improved video processing with 50% lower latency and support for video chapters via segmentation.

- July 15, 2025: Expanded REST API documentation and quickstart guides.

Prerequisites :

To use Azure AI Content Understanding, you need:

- An active Azure subscription. Create one for free at azure.microsoft.com.

- An Azure AI Foundry resource in a supported region (e.g., westus, swedencentral, australiaeast).

- Permissions: Contributor or Owner role on the Azure subscription for resource creation.

- Tools: cURL or Postman for REST API interactions, or the Azure AI Foundry portal for no-code setup.

Setup Steps :

Follow these steps to set up Azure AI Content Understanding in the Azure AI Foundry portal:

- Create an Azure AI Foundry Resource:

- Log in to the Azure portal.

- Navigate to Create a resource > AI Foundry > AI Foundry.

- Select a supported region (e.g., westus).

- Provide a resource name, subscription, and resource group.

- Review and create the resource.

- Create a Hub-Based Project:

- Go to the Azure AI Foundry home page.

- Select Create a hub-based project (Foundry projects are not supported).

- Choose a project name and region.

- Create a Content Understanding Task:

- In the Azure AI Foundry portal, select your hub-based project.

- Navigate to Content Understanding > + Create.

- Choose Single-file task (Standard Mode) or Multi-file task (Pro Mode).

- Enter a task name and optional description.

- Upload Sample Data:

- Upload a sample file (e.g., an invoice PDF, image, or audio file) to define the schema.

- Supported formats: PDF, docx, xlsx, pptx, msg, eml, rtf, html, md, xml, JPEG, PNG, MP3, MP4, etc. (up to 1 GB or 4 hours for audio/video).

- Define the Field Schema:

- Use the portal to create a schema or select a prebuilt analyzer template (e.g., invoice or document analyzer).

- Define fields to extract (e.g., “Invoice Number,” “Total Amount”) with clear descriptions.

- Example: For “Invoice Date,” specify “The date the invoice was issued, typically at the top right.”

- Save the schema.

- Build the Analyzer:

- Test the schema with sample data to verify output accuracy.

- Adjust the schema if needed, then select Build Analyzer to generate an API endpoint.

- Obtain API Credentials:

- From the Azure portal, retrieve the endpoint URL and API key for your Azure AI Foundry resource.
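
The field schema built in the portal can also be pictured as plain data. The sketch below assembles a schema as a Python dictionary and rejects fields that lack a description. The key names (`fieldSchema`, `fields`, `type`, `description`), the type strings, and the `build_field_schema` helper are illustrative assumptions that this guide does not confirm, so check them against the REST API reference before sending such a payload.

```python
import json

def build_field_schema(fields):
    """Build an illustrative schema payload from (name, type, description) tuples.

    The payload layout is an assumption for illustration only.
    """
    schema = {}
    for name, field_type, description in fields:
        # Clear descriptions matter, so refuse empty ones up front.
        if not description.strip():
            raise ValueError(f"Field {name!r} needs a clear description")
        schema[name] = {"type": field_type, "description": description.strip()}
    return {"fieldSchema": {"fields": schema}}

payload = build_field_schema([
    ("InvoiceNumber", "string", "The unique identifier printed on the invoice."),
    ("InvoiceDate", "date", "The date the invoice was issued, typically at the top right."),
    ("TotalAmount", "number", "The total amount due on the invoice."),
])
print(json.dumps(payload, indent=2))
```

Keeping the schema as data makes it easy to review field descriptions in version control before building the analyzer.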

Usage Instructions :

Azure AI Content Understanding can be used via the Azure AI Foundry portal (no-code) or REST APIs (programmatic). Below are instructions for both.

Using the Azure AI Foundry Portal

- Access the Task:

- Navigate to your project in the Azure AI Foundry portal.

- Select the created Content Understanding task.

- Upload Content:

- Upload files (documents, images, audio, or video) for analysis.

- Use prebuilt templates for common scenarios (e.g., invoice analysis) or your custom schema.

- Review Output:

- View extracted fields, classifications, or generated insights (e.g., summaries).

- Download results in JSON or Markdown format for integration.
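
A downloaded JSON result can be post-processed with a few lines of Python. This sketch assumes the file has the same shape as the example response in the REST API section of this guide (`result.fields`, with each field carrying a `type` and a value key such as `valueString`). The `flatten_fields` helper is hypothetical, and the inline sample stands in for a file loaded from disk.

```python
import json

def flatten_fields(document):
    """Return {field_name: value} from a result document.

    Assumes each field stores its value under a key starting with "value"
    (e.g. "valueString"); fields without such a key map to None.
    """
    fields = document.get("result", {}).get("fields", {})
    flat = {}
    for name, field in fields.items():
        value_keys = [k for k in field if k.startswith("value")]
        flat[name] = field[value_keys[0]] if value_keys else None
    return flat

# Stand-in for loading a downloaded result file with json.load()
sample = json.loads("""
{
  "id": "<request-id>",
  "status": "Succeeded",
  "result": {
    "analyzerId": "prebuilt-documentAnalyzer",
    "fields": {
      "Summary": {"type": "string", "valueString": "This document is an invoice issued by Contoso Ltd."}
    }
  }
}
""")
print(flatten_fields(sample))
```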

Using REST APIs

- Prepare the Request:

- Use the endpoint and key from your Azure AI Foundry resource.

- Example cURL command for a document analyzer:

curl -i -X POST "{endpoint}/contentunderstanding/analyzers/{analyzerId}/analyze?api-version=2025-05-01-preview" \
  -H "Ocp-Apim-Subscription-Key: {key}" \
  -H "Content-Type: application/json" \
  -d '{"url": "https://github.com/Azure-Samples/azure-ai-content-understanding-python/raw/refs/heads/main/data/invoice.pdf"}'

- Replace {endpoint}, {key}, and {analyzerId} (e.g., prebuilt-documentAnalyzer).

- Check Job Status:

- Retrieve the request-id from the POST response’s Operation-Location header.

- Poll the status using:

curl -i -X GET "{endpoint}/contentunderstanding/analyzerResults/{request-id}?api-version=2025-05-01-preview" \
  -H "Ocp-Apim-Subscription-Key: {key}"

- Wait until the status is Succeeded.

- Parse the Response:

- The JSON response includes extracted fields, confidence scores, and grounding details.

- Example response:

{
  "id": "<request-id>",
  "status": "Succeeded",
  "result": {
    "analyzerId": "prebuilt-documentAnalyzer",
    "fields": {
      "Summary": { "type": "string", "valueString": "This document is an invoice issued by Contoso Ltd." }
    }
  }
}

Python Code

Below is a Python script to interact with the Content Understanding API, based on Azure-Samples.

import time

import requests

# Replace with your endpoint and key
endpoint = "https://<your-resource>.cognitiveservices.azure.com"
key = "<your-api-key>"
analyzer_id = "prebuilt-documentAnalyzer"
file_url = "https://github.com/Azure-Samples/azure-ai-content-understanding-python/raw/refs/heads/main/data/invoice.pdf"

# POST request to start analysis
url = f"{endpoint}/contentunderstanding/analyzers/{analyzer_id}/analyze?api-version=2025-05-01-preview"
headers = {
    "Ocp-Apim-Subscription-Key": key,
    "Content-Type": "application/json",
}
body = {"url": file_url}
response = requests.post(url, headers=headers, json=body)
response.raise_for_status()  # stop early on HTTP errors (e.g., bad key or endpoint)

# The Operation-Location header holds the URL to poll for the result
operation_location = response.headers["Operation-Location"]

# Poll until the job reaches a terminal state
while True:
    response = requests.get(operation_location, headers={"Ocp-Apim-Subscription-Key": key})
    response.raise_for_status()
    result = response.json()
    if result["status"] in ["Succeeded", "Failed"]:
        break
    time.sleep(1)

if result["status"] == "Succeeded":
    print(result["result"]["fields"])
else:
    print("Analysis failed:", result)

Common Use Cases :

Azure AI Content Understanding is designed for various enterprise scenarios, including:

- Post-Call Analytics: Extracts insights from call center recordings or meeting transcripts, identifying sentiment, speakers, and key entities (e.g., names, companies).

- Media Asset Management: Extracts features from images and videos for better content searchability and management, such as identifying brands or settings.

- Financial Services: Automates processing of complex documents like contracts, loan applications, or financial reports by extracting key fields and clauses.

- Tax Processing: Simplifies data extraction from diverse tax forms to create consolidated views.

- Retrieval-Augmented Generation (RAG): Builds indexes from extracted image or document data to power chat-based applications.

- Website Personalization: Companies like WPP use it to transform static website content into dynamic, conversational interfaces by extracting data from text, PDFs, images, and videos.

Best Practices :

- Schema Design: Use precise field descriptions (e.g., “Customer Name: The name of the client, not the mailing address”).

- Confidence Thresholds: Set higher thresholds (e.g., 0.80) for critical fields like invoice totals and lower for non-critical fields like comments.

- Language Selection: For audio/video, limit transcription languages (e.g., English and Spanish only) to reduce errors.

- Preprocessing: Use high-resolution scans and preprocess data to remove noise for better accuracy.

- Batch Processing: Process files in batches to minimize API calls and costs.

- Testing: Test analyzers with diverse samples to ensure robustness.
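
The confidence-threshold advice can be sketched as a small routing step that flags low-confidence fields for human review. The example assumes each extracted field carries a numeric `confidence` key. That key name, the field names, the default threshold of 0.50, and the `needs_review` helper are illustrative assumptions; only the 0.80 value comes from the guidance above.

```python
# Per-field thresholds: strict for critical fields, lenient for the rest.
THRESHOLDS = {"TotalAmount": 0.80}
DEFAULT_THRESHOLD = 0.50  # illustrative value for non-critical fields

def needs_review(fields):
    """Return sorted names of fields whose confidence is below threshold.

    A missing confidence is treated as 0.0 so the field is always flagged.
    """
    flagged = []
    for name, field in fields.items():
        threshold = THRESHOLDS.get(name, DEFAULT_THRESHOLD)
        if field.get("confidence", 0.0) < threshold:
            flagged.append(name)
    return sorted(flagged)

fields = {
    "TotalAmount": {"type": "number", "valueNumber": 610.0, "confidence": 0.72},
    "Comments": {"type": "string", "valueString": "Thanks!", "confidence": 0.61},
}
print(needs_review(fields))  # ['TotalAmount']
```

Here the invoice total is flagged because 0.72 is below its 0.80 threshold, while the comment passes the lower default.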

Limitations :

- File Size: Up to 1 GB or 4 hours for audio/video.

- Video Processing: Frame sampling at ~1 FPS and 512×512 resolution may miss rapid motions or small text.

- Face Capabilities: Restricted to Microsoft-managed customers with registration via the Face Recognition intake form.

- Pro Mode: Limited to document inputs; multimodal support is planned.

- Preview Status: No SLA, and features may change before GA.
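
The size and duration limits can be checked locally before a file is submitted. This is a minimal sketch that treats both limits as hard caps and reads "1 GB" conservatively as 10**9 bytes. Both readings, and the `check_limits` helper itself, are assumptions rather than documented behavior.

```python
MAX_BYTES = 1_000_000_000   # "1 GB", read conservatively as 10**9 bytes
MAX_SECONDS = 4 * 60 * 60   # 4 hours of audio/video

def check_limits(size_bytes, duration_seconds=None):
    """Return a list of limit violations (empty if the input looks acceptable)."""
    problems = []
    if size_bytes > MAX_BYTES:
        problems.append(f"file is {size_bytes} bytes, limit is {MAX_BYTES}")
    if duration_seconds is not None and duration_seconds > MAX_SECONDS:
        problems.append(f"duration is {duration_seconds} s, limit is {MAX_SECONDS} s")
    return problems

# A 250 MB recording that runs for 5 hours exceeds only the duration limit.
print(check_limits(250_000_000, duration_seconds=5 * 3600))
```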

Pricing:

- Model: Pay-as-you-go, based on input (content extraction) and output (field extraction) costs.

- Free Trial: Available for up to 30 days with an Azure free account.

- Details: Visit the Azure AI Content Understanding pricing page for cost estimates.

Security and Compliance:

- Adheres to GDPR, HIPAA, and Microsoft’s Responsible AI principles.

- Data encryption and secure access controls are standard.

- Biometric data processing (e.g., face recognition) requires user consent and compliance with data protection laws.

- Content filtering prevents harmful content, with modified filtering available for approved customers via the Azure OpenAI Limited Access Review form.